Why not solar energy on Earth?

Space is hard to get to. Why not just power server farms from solar cells on earth?

Simply put, massive habitat destruction.


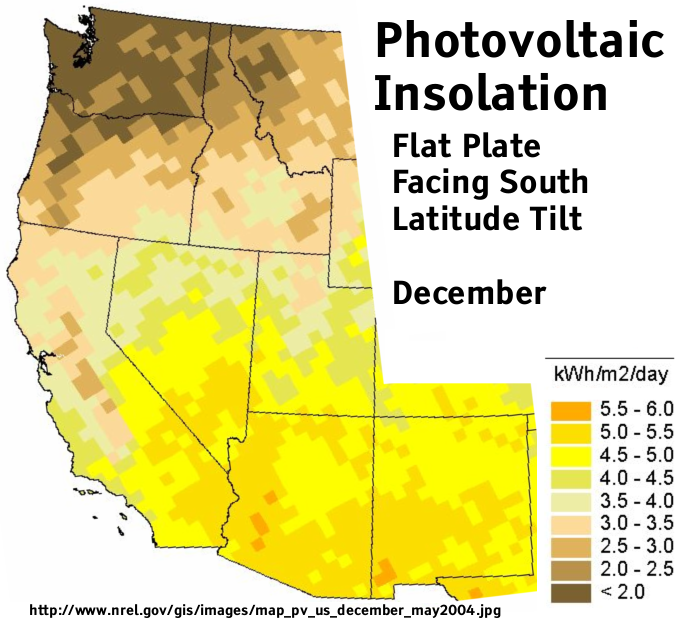

174,000 terawatts of solar energy hits the Earth. Some is reflected, and some is absorbed by clouds. The energy actually reaching the surface of the Earth (averaging 120,000 terawatts globally) is called insolation, and is usually measured in kilowatt-hours per m² per day.

Actual experience is worse than the raw insolation figures suggest. The Agua Caliente Solar Project in Arizona occupies 2400 acres (9.7 km²), and will produce as much as 400 MW at summer peak (at the plant boundary, not to the customer). But that is under 1000 W/m² daytime insolation, not the December average of 208 W/m², so December output is only 0.208 of the peak. Manufacturing, power storage, and grid transmission cut that in half. So (400 MW / 9.7 km²) × 0.208 × 0.5 ≈ 4 W/m².
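The Agua Caliente arithmetic can be checked with a short Python sketch. The variable and function names are illustrative, and the 0.5 delivery factor is the text's own rough halving, not a measured value.

```python
# Figures quoted in the text for Agua Caliente; names are illustrative.
PEAK_OUTPUT_W = 400e6        # summer peak at the plant boundary
PLANT_AREA_M2 = 9.7e6        # 9.7 km^2
PEAK_INSOLATION = 1000.0     # W/m^2, daytime summer peak
DECEMBER_INSOLATION = 208.0  # W/m^2, December average
DELIVERY_FRACTION = 0.5      # the text's rough halving (assumption, not measured)


def delivered_density(peak_w, area_m2, avg_insolation, peak_insolation, delivery_fraction):
    """Average delivered power per square meter of plant area, in W/m^2."""
    peak_density = peak_w / area_m2
    return peak_density * (avg_insolation / peak_insolation) * delivery_fraction


density = delivered_density(PEAK_OUTPUT_W, PLANT_AREA_M2,
                            DECEMBER_INSOLATION, PEAK_INSOLATION, DELIVERY_FRACTION)
print(f"{density:.1f} W/m^2")  # about 4.3 W/m^2
```

Since 1 W/m² equals 1 MW/km², the same result can be read as roughly 4 MW per square kilometer of plant.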

This is a far cry from the 1367 watts per square meter in space. On that basis alone, it is cheaper to orbit the data centers.

But wait, there's more. Oil may or may not be running out, but with the United States burdened by a huge international debt, and growing economies like China demanding a larger share of world production, we may soon be unable to import foreign oil, and be forced to develop an electric transportation infrastructure. The total energy use of the United States and Canada averages 4 terawatts, mostly for transportation. 4 TW divided by 9 MW/km² is about 450,000 km², or roughly 170,000 square miles, bigger than New Mexico and slightly larger than California. Can you imagine how much destroyed habitat that represents?
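A minimal sketch of the land-area estimate, using the text's 4 TW demand and 9 MW/km² density, and the standard conversion of about 2.59 km² per square mile. The second case reuses the 4 W/m² (4 MW/km²) Agua Caliente figure from earlier for comparison; the function name is illustrative.

```python
KM2_PER_SQ_MILE = 2.589988  # standard conversion factor


def land_area_km2(demand_w, density_w_per_km2):
    """Land area in km^2 needed to supply demand_w at a given areal power density."""
    return demand_w / density_w_per_km2


DEMAND_W = 4e12  # 4 TW, US and Canada average (from the text)

for label, density in [("9 MW/km^2", 9e6), ("4 MW/km^2", 4e6)]:
    area_km2 = land_area_km2(DEMAND_W, density)
    area_sq_mi = area_km2 / KM2_PER_SQ_MILE
    print(f"{label}: {area_km2:,.0f} km^2, {area_sq_mi:,.0f} square miles")
```

At 9 MW/km² this gives roughly 444,000 km²; at the lower Agua Caliente density the area grows to about one million km².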

The deserts of the southwest US are not lifeless - they are simply too arid to support agriculture. Desert soil is full of microbes forming a biologically active crust (it goes crunch under your hiking boots); the bio-productivity can be as much as 100 grams of sequestered carbon per year per square meter in the Mojave, equivalent to about 370 grams of CO₂. That is 370 tons of CO₂ per year per square kilometer, or 180 million tons of CO₂ per year for 500,000 square kilometers. Note that US CO₂ emissions are currently 5.5 billion tons per year.

100 g/m² carbon uptake is the annual productivity - the desert soil stores as much as 2 kg/m² of carbon, most of which would also be released into the environment as the soil dies.
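The carbon figures above can be reproduced with a short sketch. It assumes metric tonnes and the molar-mass ratio of CO₂ to carbon (about 3.66); the stored-carbon line is an upper bound that assumes all 2 kg/m² is released, whereas the text says only "most". Names are illustrative.

```python
CO2_PER_CARBON = 44.01 / 12.011  # molar mass ratio of CO2 to C, about 3.66


def co2_tonnes(carbon_g_per_m2, area_km2):
    """Tonnes of CO2 equivalent to a carbon density (g/m^2) spread over area_km2."""
    grams_carbon = carbon_g_per_m2 * area_km2 * 1e6  # 1 km^2 = 1e6 m^2
    return grams_carbon / 1e6 * CO2_PER_CARBON        # 1 tonne = 1e6 g


AREA_KM2 = 500_000        # area used in the text
US_EMISSIONS_T = 5.5e9    # tonnes CO2 per year (from the text)

annual_uptake = co2_tonnes(100, AREA_KM2)    # 100 g C/m^2 per year
stored_upper = co2_tonnes(2000, AREA_KM2)    # 2 kg C/m^2, assuming full release

print(f"lost annual uptake: {annual_uptake / 1e6:.0f} million tonnes CO2/year")
print(f"share of US emissions: {annual_uptake / US_EMISSIONS_T:.1%}")
print(f"stored carbon, upper bound: {stored_upper / 1e9:.1f} billion tonnes CO2")
```

The lost annual uptake comes to about 3% of the quoted US emissions, while a full release of the stored carbon would be a one-time amount comparable to most of a year of those emissions.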

Economics: A solar cell panel designed to survive outdoors for 25 years is a far more complicated object than a patch of paved road. The entire interstate highway system is 75,000 kilometers long. Assuming an average paved width of 100 feet, 30 meters, the area of the system is 2250 km². Over the last 50 years, the system cost 130 billion dollars to build, or about $60M/km². If the solar cells, converters, energy storage, and transmission systems cost only as much per square kilometer as the interstate highway system, the whole thing would cost 23 trillion dollars.
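The highway comparison can be sketched as follows. The per-area cost follows directly from the text's figures; the total depends on which land area is plugged in, so the sketch evaluates two assumed areas (400,000 and 450,000 km²) rather than asserting one. Names are illustrative.

```python
# Interstate highway figures quoted in the text.
LENGTH_KM = 75_000
WIDTH_KM = 0.030          # 30 meters of paved width
BUILD_COST_USD = 130e9

highway_area_km2 = LENGTH_KM * WIDTH_KM
cost_per_km2 = BUILD_COST_USD / highway_area_km2
print(f"area: {highway_area_km2:,.0f} km^2, cost: ${cost_per_km2 / 1e6:.0f}M per km^2")

# Assumed solar farm areas for illustration; the text's estimates span this range.
for solar_area_km2 in (400_000, 450_000):
    total = cost_per_km2 * solar_area_km2
    print(f"{solar_area_km2:,} km^2 -> ${total / 1e12:.0f} trillion")
```

The unrounded per-area cost is about $58M/km², which the text rounds to $60M; the two assumed areas give roughly 23 and 26 trillion dollars.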

Imagine how much it would cost to keep the interstate highway system optically transparent ...

Again, Agua Caliente gives a more realistic estimate of cost: $1.8 billion for 42 million watts delivered to customers in December, or about $43 per watt. Scaled to 4 terawatts, that is 170 trillion dollars. And that is just for the plant, not the transmission lines and power storage.
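A minimal sketch of the Agua Caliente cost scaling, assuming the same December derating (0.208) and delivery factor (0.5) used earlier in the text. Names are illustrative.

```python
PLANT_COST_USD = 1.8e9
PEAK_OUTPUT_W = 400e6
DECEMBER_RATIO = 0.208     # December average relative to peak insolation
DELIVERY_FRACTION = 0.5    # the text's rough halving (assumption, not measured)
DEMAND_W = 4e12            # 4 TW

december_customer_w = PEAK_OUTPUT_W * DECEMBER_RATIO * DELIVERY_FRACTION
cost_per_watt = PLANT_COST_USD / december_customer_w
total_cost = cost_per_watt * DEMAND_W

print(f"December customer power: {december_customer_w / 1e6:.1f} MW")
print(f"cost per delivered watt: ${cost_per_watt:.0f}")
print(f"cost for 4 TW: ${total_cost / 1e12:.0f} trillion")
```

This gives about 41.6 MW, $43 per delivered watt, and roughly 173 trillion dollars, which the text rounds to 170 trillion.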

Effects of Destroyed Habitat

Compared to climax forest or prairie grassland, agricultural land sequesters much less carbon. Agricultural land-use change was the main cause of the anthropogenic CO₂ run-up until the mid-20th century, and was especially widespread before the 1500s, when Old World diseases wiped out American Indians and their forest-destroying slash-and-burn agriculture.


Alternative: Server Sky

If some tiny fraction of that enormous cost were spent on research to get thinsat thickness down to 5 microns (reducing the weight to 300 milligrams) and to reduce launch costs to 1000 dollars per kilogram, then the cost of orbiting a 5 watt, 85% availability thinsat would be 30 cents. Orbiting 4 trillion watts of thinsats would cost 280 billion dollars.
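The thinsat launch estimate can be checked with a short sketch. It counts launch cost only, as the paragraph does, and treats the 85% availability as a simple multiplier on the 5 watt rating; names are illustrative.

```python
THINSAT_MASS_KG = 300e-6        # 300 milligrams
LAUNCH_COST_USD_PER_KG = 1000.0
THINSAT_POWER_W = 5.0
AVAILABILITY = 0.85
DEMAND_W = 4e12                 # 4 trillion watts

launch_cost_each = THINSAT_MASS_KG * LAUNCH_COST_USD_PER_KG
average_power_each = THINSAT_POWER_W * AVAILABILITY
thinsat_count = DEMAND_W / average_power_each
total_launch_cost = thinsat_count * launch_cost_each

print(f"launch cost per thinsat: ${launch_cost_each:.2f}")
print(f"thinsats needed: {thinsat_count:.2e}")
print(f"total launch cost: ${total_launch_cost / 1e9:.0f} billion")
```

This works out to about 940 billion thinsats and roughly 280 billion dollars of launch cost; manufacturing cost is not included.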

Development would not stop there, of course. A system such as the launch loop could loft thinsats for much less. Launch would be essentially free, relative to manufacturing cost. If thinsats were made of lunar silicon, and used for space solar power, they would be far heavier (perhaps 500 microns thick with coverglass, and 30 grams), but "closer" to deployment in the Lagrange positions, from electrically-powered lunar launchers. The environmental cost would asymptote towards zero, and the system would provide its own launch energy.

The only thing holding us back from an abundant energy future is faint-heartedness. This will get done; the only question is whether Americans have the courage to participate.